Chapter 1. Data science and artificial intelligence


- 1.1 Statistics, data science, machine learning, and artificial intelligence

- 1.2 General process of data analysis

- 1.3 Data classification

- 1.4 Software programs for data analysis

- 1.5 References

- 1.6 Exercise

CHAPTER OBJECTIVES

1.1 Statistics, data science, machine learning, and artificial intelligence


Statistics

The earliest known writings on probability and statistical inference date from the 8th to 13th centuries, when the Arab mathematicians Al-Khalil (717–786), Al-Kindi (801–873), and Ibn Adlan (1187–1268) developed early forms of statistical inference using frequency analysis of samples. The mathematical foundations of modern statistics were laid from the 16th to the early 19th century, beginning with the development of probability theory by Gerolamo Cardano, Blaise Pascal, and Pierre de Fermat. Adrien-Marie Legendre first described the method of least squares in 1805. In the late 19th century, Francis Galton and Karl Pearson transformed statistics into a rigorous mathematical discipline used for analysis in science, industry, and politics. Galton introduced the concepts of standard deviation, correlation, and regression analysis. Pearson developed the product-moment correlation coefficient and the method of moments for fitting distributions to samples. In the early 20th century, Ronald Fisher built much of the framework of modern statistical inference, including the design of experiments, analysis of variance, and maximum likelihood estimation; the term 'statistics' itself was already in use in the 18th century. Much of this research concerns estimating the parameters of an unknown population from a sample, which is called inferential statistics.

Today, statistical methods are applied in every field that involves decision-making, both to draw accurate inferences from a collected body of data and to make decisions under uncertainty. Modern computers have expedited large-scale statistical computation and have made possible new methods that are impractical to perform manually. Modern statistics is the discipline of efficiently collecting, summarizing, and analyzing data in order to make scientific decisions in uncertain situations using probabilistic models.

Data Science

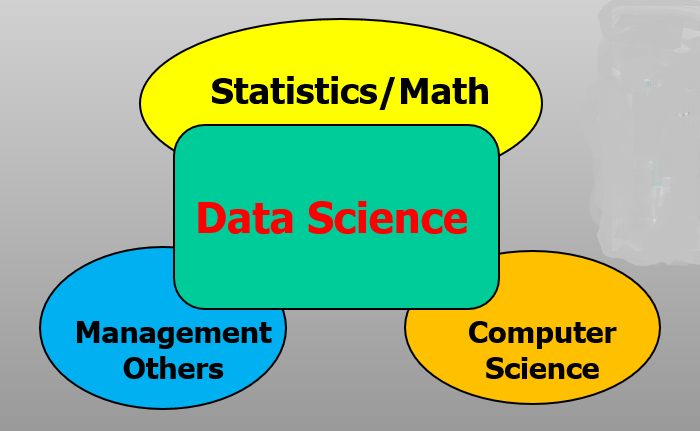

The development of computer and communication technologies has intensified with the Internet of Things (IoT), in which electronic devices are connected to computers via the Internet. In the near future, we expect a society that utilizes artificial intelligence and IoT and that will be radically different from the present, with self-driving cars, robot doctors, and robot teachers. This is called the fourth industrial revolution society. The development of these technologies has created massive data, called Big Data, which includes structured and unstructured data on a scale that was unimaginable in the past. Typical examples of big data include Google's search records, social media messages sent from mobile phones, weblogs from internet connections, and the telephone records of global telecom companies. Big data is expected to grow exponentially, and hyper-forecasting is also expected to become possible.

Big data is so large and diverse that it cannot be fully utilized by traditional statistical approaches alone. We must simultaneously apply theories of statistics, computer science, mathematics, management, and related disciplines to analyze and utilize it. Data science is a new area of study in which statistics, mathematics, computer science, and other disciplines are fused to analyze and utilize the big data that has emerged this century. Although data science is closely related to statistics, it is not statistics itself. While statistics provides insights that help you make the right decisions, data science goes one step further and focuses on creating the best possible solutions to problems. To do data science, you need business sense as well as skills in programming, statistics, and mathematics.

The success or failure of individuals, groups, companies, and even countries depends on how efficiently big data is utilized. There are many examples in which data science is used to analyze big data and apply the results to real-world problems.

Methodologies used in the analysis of big data include many traditional statistical methods, such as estimation, hypothesis testing, multivariate statistical analysis, and linear models. More recent theories in mathematics, such as neural networks and support vector machines, and theories in computer science, such as distributed computing, machine learning, and artificial intelligence, are also used. Since data science is a fusion of several disciplines, understanding all of them is not easy, but it is generally feasible for those with a talent for mathematics. Those who study data science could become leaders of the Fourth Industrial Revolution society.

Data Science, data mining, machine learning, and artificial intelligence

Machine learning has been used extensively, especially in computer science. Instead of humans creating models and analyzing data, the computer automatically learns rules from data to create a software program that solves problems. Unlike in data mining, the one who uses the rules or patterns in machine learning is not a person but a computer. Nevertheless, most of the techniques used in machine learning are similar to those used in data mining. In addition, while data mining algorithms are relatively transparent in how they create rules, machine learning algorithms often behave like a black box, making it difficult to know why a decision was made. The work of data scientists is to analyze data and to create such models. Collecting and analyzing data is very important to data scientists because creating a model is relatively easy once good data has been prepared. If the data is a mess, however, no algorithm, however good, can produce a model that works properly.

Artificial intelligence (AI) is an extension of machine learning that refers to machines that imitate human intelligence and perform complex tasks as humans do. Artificial intelligence uses many techniques from data mining and machine learning; in particular, the artificial neural network model is its most important tool. Deep learning, a term often seen these days, refers to algorithms that train artificial neural networks with many layers.

In the early days of artificial intelligence, efforts were made to create machines similar to humans by imitating the human brain and way of thinking. In 1951, Marvin Minsky and Dean Edmonds built SNARC, one of the first artificial neural network machines, and the 1956 Dartmouth workshop in the United States, which Minsky co-organized, established artificial intelligence as a research field. In the Soviet Union, computer scientist Viktor Glushkov proposed the All-Union Automatic Information Processing System (OGAS) in the early 1960s. As computer science developed, the focus shifted from reproducing human intelligence to using machines to solve real-world problems efficiently. However, because of the limited information processing capabilities of the time, applications of AI remained limited. In 1974, Paul Werbos proposed a back-propagation algorithm that could train a multilayer neural network. Research on artificial intelligence using multilayer neural network models was then actively conducted and produced visible results such as character recognition and primitive speech recognition, but the algorithm sometimes failed to find a solution. In 2006, Geoffrey Hinton and colleagues presented a deep learning algorithm that addressed this problem of the back-propagation approach and made it practical to train networks with many layers. In 2012, Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton built AlexNet, a convolutional neural network architecture, and won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), a computer vision competition, by a wide margin; deep learning then became the dominant trend, surpassing existing methodologies. In 2016, Google DeepMind's AlphaGo popularized deep learning methods, which have since shown results that surpass human levels in several fields.

Artificial intelligence began to change significantly in 2022 with the emergence of generative AI. Representative generative AI systems, such as OpenAI's ChatGPT and image-generation AI, have begun to be used in personal hobbies and at work, so the practical application of AI, which once seemed like a dream, is now under way. The technical foundation of this generative AI is the transformer architecture. Generative AI has also prompted active debate about AI, including calls for caution and warnings about the threats it may pose.

1.2 General process of data analysis

| Table 1.2.1 Composition of this book | |
|---|---|
| Chapter 1. Data science and artificial intelligence | |
| Chapter 2. Visualization of data | Chapter 6. Supervised machine learning for categorical data |
| Chapter 3. Summary of data and transformation | Chapter 7. Supervised machine learning for continuous data |
| Chapter 4. Probability and probability distribution | Chapter 8. Unsupervised machine learning |
| Chapter 5. Testing hypothesis and regression | Chapter 9. Artificial intelligence and other applications |

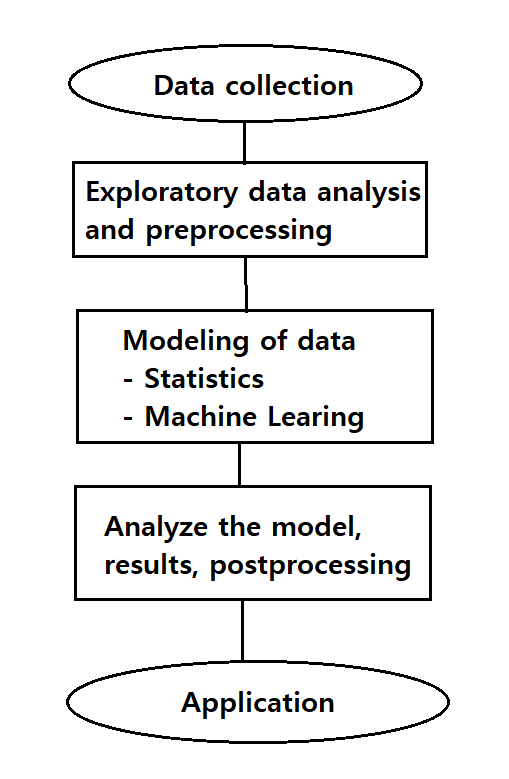

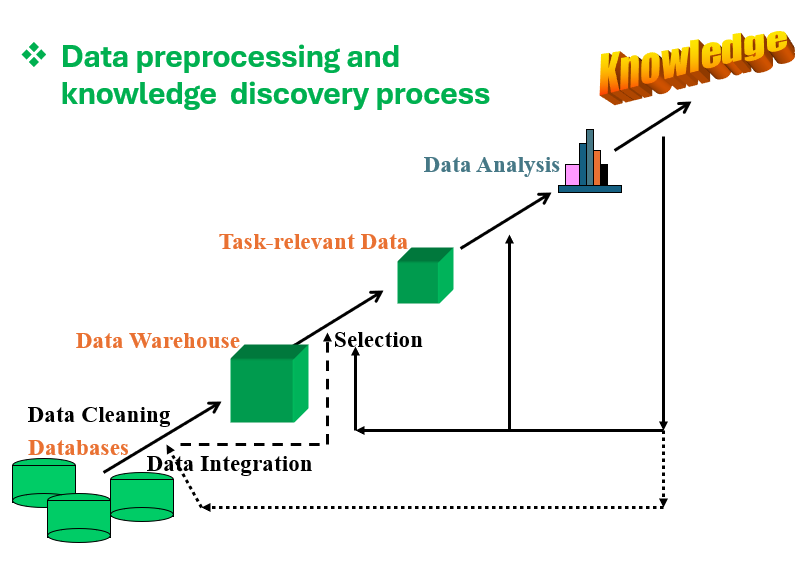

The collected data can take many forms: structured data with numbers and characters, spreadsheet files, data scattered across multiple databases, and unstructured data such as texts and documents. The first tasks in data analysis are exploratory data analysis and preprocessing, that is, cleaning the collected data and building a data warehouse. When the data set is very large and contains data unnecessary for the analysis, we sometimes select only the task-relevant data. Data visualization for exploring data is discussed in Chapter 2. Chapter 3 discusses preprocessing, which removes noise and duplicate data and performs normalization, discretization, or mathematical transformations. Chapter 3 also discusses selecting features suitable for data analysis and dimension reduction. Data preprocessing is a tedious and time-consuming part of the overall data analysis task, but we can obtain useful information if it is done well. Software programs are essential for these processes.
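
Two of the preprocessing steps named above, removing duplicate data and normalization, can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the student records, the function names `remove_duplicate_records` and `min_max_normalize`, and the choice of min-max scaling are hypothetical and are not taken from Chapter 3.

```python
# Hypothetical records (student id, height in cm); the values are illustrative only.
records = [
    ("s01", 160.0),
    ("s02", 172.5),
    ("s02", 172.5),  # an exact duplicate of the previous record
    ("s03", 181.0),
    ("s04", 150.0),
]


def remove_duplicate_records(rows):
    """Drop exact duplicate records while keeping first-occurrence order."""
    seen = set()
    unique = []
    for row in rows:
        if row not in seen:
            seen.add(row)
            unique.append(row)
    return unique


def min_max_normalize(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are equal, so there is no spread to rescale.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


cleaned = remove_duplicate_records(records)
heights = [height for _, height in cleaned]
normalized = min_max_normalize(heights)

print(cleaned)
print(normalized)
```

Min-max scaling is only one possible normalization; standardizing to zero mean and unit variance is another common choice, and which one is appropriate depends on the analysis method that follows.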

Data are usually classified based on their attributes for later analysis, and Section 1.3 discusses data classification. Building a model for knowledge discovery in data is the primary function of data science, machine learning, and artificial intelligence. In Chapters 4 and 5, we review basic probability theory and statistical analysis techniques, such as hypothesis testing and regression analysis, that are used to build models for data analysis. The data modeling process determines which analysis method is appropriate for the given problem and establishes a model. Chapters 6 and 7 cover supervised machine learning and classification analysis, that is, models or functions that describe and distinguish classes or concepts for future prediction, such as decision trees, Bayes classification functions, neural networks, support vector machines, and ensemble models. Unsupervised learning models and clustering analysis are discussed in Chapter 8. When the class label is unknown, clustering models form new classes by grouping data on the principle of maximizing the intra-class similarity and minimizing the inter-class similarity.

Post-processing includes evaluating the models and analyzing the results, for example by visualizing them or by extracting, summarizing, and explaining only the meaningful findings, so that the results of the data analysis can be used efficiently for decision-making.

Chapter 9 introduces applications of data science to artificial intelligence, text mining, web mining, and other areas. Further study of advanced theories in statistics, mathematics, and computer science is necessary to understand data science in depth.

1.3 Data classification

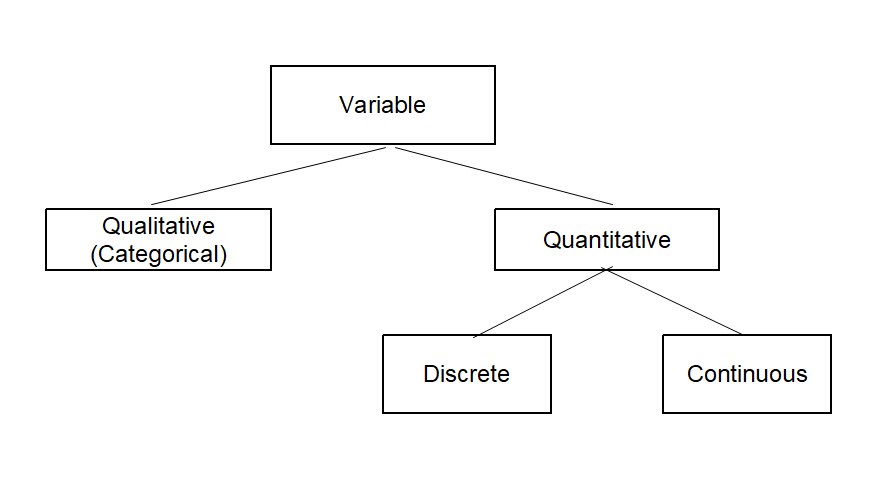

Variables such as gender, marital status, and eye color are called qualitative variables, and their data are called qualitative data. Most qualitative variables are categorical, yielding non-numerical data with categories. Values of a qualitative variable are sometimes coded with numbers, for example, zip codes representing geographical locations, or '1' for males and '2' for females. We cannot do meaningful arithmetic with such numbers: adding two zip codes or averaging coded gender values has no interpretation. Documents that consist of text are also qualitative data.

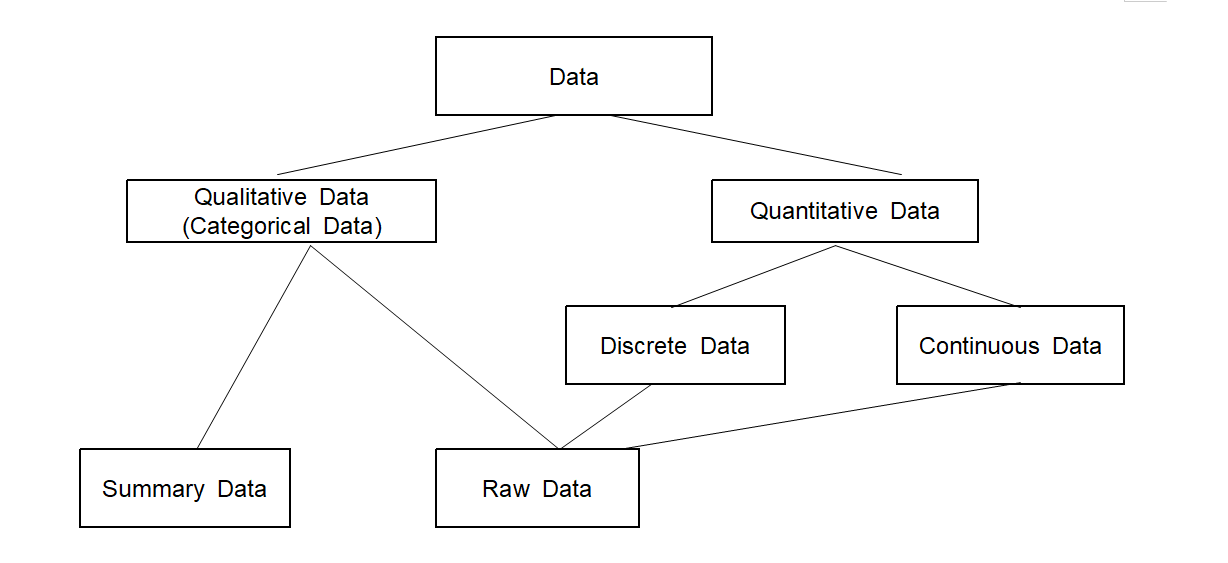

Variables such as height, weight, and years of education are called quantitative variables, which yield numerical data. Quantitative variables can be classified as either discrete or continuous. A discrete variable is one whose possible values can be counted, which implies that the set of values is either finite or countably infinite. A discrete variable usually involves a count of something, such as the number of cars owned by a family or the number of students in a class. A continuous variable is a variable whose possible values form an uncountable set of real numbers. Typically, a continuous variable involves measuring something, such as a person's age, the weight of a newborn baby, or the fuel mileage of a car. Suppose we measure the height of a student. We usually write it as an integer value, such as 180cm, which looks like a discrete value, but 180cm is only an approximation of an actual real number such as 180.123456; therefore, height is a continuous variable. We can transform quantitative data into categorical data by dividing all possible values of a quantitative variable into non-overlapping intervals. For example, we can transform age data into categorical data using intervals such as '< 30', '≥ 30 ~ < 40', '≥ 40 ~ < 60', and '≥ 60'. Types of variables are summarized graphically in <Figure 1.3.1>.
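
The age transformation described above can be sketched in Python as follows. This is a minimal illustration, assuming hypothetical names (`CUT_POINTS`, `LABELS`, `age_group`) and made-up ages; only the four intervals come from the text. The standard-library function `bisect_right` counts how many cut points are less than or equal to an age, which is exactly the index of the interval the age falls into.

```python
from bisect import bisect_right

# Interval boundaries and labels follow the age example in the text.
CUT_POINTS = [30, 40, 60]
LABELS = ["< 30", "≥ 30 ~ < 40", "≥ 40 ~ < 60", "≥ 60"]


def age_group(age):
    """Map a numeric age to the label of the non-overlapping interval containing it."""
    # bisect_right returns the number of cut points <= age, so an age of
    # exactly 30 falls into the second interval ('≥ 30 ~ < 40').
    return LABELS[bisect_right(CUT_POINTS, age)]


# Hypothetical ages, chosen to include the interval boundaries.
ages = [12, 29, 30, 39.5, 40, 59, 60, 83]
groups = [age_group(a) for a in ages]

for age, group in zip(ages, groups):
    print(age, "->", group)
```

Because the intervals do not overlap and together cover every possible age, each value receives exactly one category.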

Qualitative data can be entered either as raw data or as frequency table data for analysis with a software program. For example, suppose the gender of ten elementary school students is recorded as follows:

male, female, male, female, male, male, male, female, female, male

This is referred to as the raw data of the gender variable, and it can be arranged in a single column of an Excel sheet, as in Table 1.3.1.

| Table 1.3.1 Raw data by gender survey | |
|---|---|
| row | Gender |
| 1 | male |
| 2 | female |
| 3 | male |
| 4 | female |
| 5 | male |
| 6 | male |
| 7 | male |
| 8 | female |
| 9 | female |
| 10 | male |

In a software package, we can name this column 'Gender'; this is called the variable name, and its possible values, 'male' and 'female', are called the value labels of the variable. Counting the students in Table 1.3.1 gives six male students and four female students. This count can be summarized as in Table 1.3.2, which is called the frequency table data of the gender variable. Excel typically uses this kind of frequency table data for its graphs.

| Table 1.3.2 Frequency table data for the gender variable | |
|---|---|
| Gender | Number of Students |
| Male | 6 |
| Female | 4 |
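
The step from the raw data in Table 1.3.1 to the frequency table in Table 1.3.2 can be sketched in Python with the standard-library `collections.Counter`. This is a minimal illustration; the variable names `raw_gender` and `frequency` are hypothetical, and the values are the ten observations listed in Table 1.3.1.

```python
from collections import Counter

# Raw data of the gender variable, in the row order of Table 1.3.1.
raw_gender = [
    "male", "female", "male", "female", "male",
    "male", "male", "female", "female", "male",
]

# Counter tallies how many times each value label occurs.
frequency = Counter(raw_gender)

# Print the frequency table in the row order of Table 1.3.2.
for label in ("male", "female"):
    print(label, frequency[label])
```

The raw data keep one row per student, while the frequency table keeps one row per value label, so the table is much shorter but no longer records which student gave which answer.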

Notation of data

1.4 Software programs for data analysis

Software programs such as SPSS and SAS are very costly, and R and Python are not easy to learn because they require an understanding of a programming language. In addition, these programs are not specialized for learning complicated data science theories. This led the authors to develop a web-based software program called 『eStat』, which is specialized for learning theories and practicing data processing. 『eStat』 is free of charge and has a user-friendly interface, especially for beginners studying statistics and data science. It includes many useful dynamic graphical tools for visualizing data and machine learning techniques, so we can study theories and practice them simultaneously. Because 『eStat』 is specialized for learning data science, other software programs are also needed to practice big data analysis. R and Python, which are also free, are adopted in this book for that purpose.

Basic Operation of 『eStat』


Data science software offers numerous benefits that significantly improve the efficiency and effectiveness of the data analysis process. First, it provides powerful tools for data manipulation, visualization, and statistical analysis, allowing users to extract meaningful insights from complex data sets. Additionally, these software programs often integrate machine learning algorithms, allowing organizations to build predictive models that support informed decision-making. Furthermore, many data science programs facilitate team collaboration by providing a centralized environment for sharing code, results, and visualizations. This not only streamlines workflows but also fosters innovation through collective problem-solving. Ultimately, data science software allows businesses to realize the full potential of their data to improve operational efficiency and competitive advantage. However, data science software also faces challenges, such as integrating diverse data sources, ensuring data quality, managing algorithmic complexity, and addressing ethical concerns such as privacy and bias. Overcoming these obstacles requires skilled personnel, practical tools, and a commitment to ethical practices.

1.5 References

Some good starter books for data science include:

"Data Science for Beginners" by Andrew Park, Independently published, 2020.

"The Data Science Handbook" by Field Cady, Wiley, 2017.

"R for Data Science" by Hadley Wickham and Garrett Grolemund, O'Reilly, 2017.

"An Introduction to Statistical Learning: With Applications in R" by Gareth James, Daniela Witten, Trevor Hastie and Robert Tibshirani, Springer, 2017.

"A First Course in Statistical Programming with R" by W. J. Braun and D. J. Murdoch, Cambridge University Press, 2008.

"Statistics: An Introduction Using R" by M. J. Crawley, John Wiley & Sons, 2005.

"Statistical Computing with R" by M. L. Rizzo, Chapman and Hall/CRC, 2007.

"Data Science from Scratch: First Principles with Python" by Joel Grus, O'Reilly, 2015.

"Python for Data Analysis" by Wes McKinney, O'Reilly, 2022.

"The Art of Data Science: A Guide for Anyone who Works with Data" by Roger D. Peng and Elizabeth Matsui, Lulu.com, 2016.

"Storytelling with Data" by Cole Nussbaumer Knaflic, Wiley, 2015.

"Think Stats: Exploratory Data Analysis" by Allen B. Downey, O'Reilly, 2015.

"The Hundred-Page Machine Learning Book" by Andriy Burkov, Andriy Burkov, 2019.

"Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow" by Aurélien Géron, O'Reilly, 2017.

"Grokking Deep Learning" by Andrew W. Trask, Manning, 2019.

"Big Data: A Revolution That Will Transform How We Live, Work, and Think" by Viktor Mayer-Schönberger and Kenneth Cukier, Houghton Mifflin Harcourt, 2013.

Some advanced data science books include:

"Probability and Statistical Inference" by R. V. Hogg and E. A. Tanis, Prentice Hall, 2009.

"Applied Multivariate Statistical Analysis" by Richard Arnold Johnson and Dean W. Wichern, Pearson Prentice Hall, 2007.

"Introduction to Data Mining" by P. N. Tan, M. Steinbach, and V. Kumar, Addison-Wesley, 2005.

"The Elements of Statistical Learning: Data Mining, Inference, and Prediction" by T. Hastie, R. Tibshirani, and J. H. Friedman, Springer, 2nd Edition, 2009.

"Pattern Classification" by R. O. Duda, P. E. Hart, and D. G. Stork, Wiley-Interscience, 2nd Edition, 2000.

"Pattern Recognition and Machine Learning" by C. M. Bishop, Springer, 2nd Edition, 2007.

"Deep Learning with Python" by François Chollet, Manning, 2018.

"Data Science with Python and Dask" by Jesse Daniel, Manning, 2019.

"Pattern Recognition" by S. Theodoridis and K. Koutroumbas, 4th Edition, Academic Press, 2008.

"Statistical Pattern Recognition" by A. R. Webb, Wiley, 2nd Edition, 2002.

Some professional data science books include:

"Build a Career in Data Science" by Emily Robinson and Jacqueline Nolis, Manning, 2020.

"The Data Science Handbook: Advice and Insights from 25 Amazing Data Scientists" by Carl Shan, Henry Wang, William Chen and Max Song, Data Science Bookshelf, 2015.

"Data Science for Business" by Foster Provost and Tom Fawcett, O'Reilly, 2013.

1.6 Exercise

1.1 Describe core technologies of the Fourth Industrial Revolution.

1.2 Explain what Statistics is.

1.3 Explain what Data Science is.

1.4 Explain what Machine Learning is.

1.5 Explain what Artificial Intelligence is.

1.6 Describe possible examples of Big Data.

1.7 Explain qualitative data and quantitative data.

1.8 Explain discrete data and continuous data.

1.9 Explain raw data and frequency table data.

1.10 Which software programs are used for data science?